The University of Maryland’s Office of Student Conduct received 73 referrals for artificial intelligence-related academic integrity violations during the 2022-23 academic year.

Of these referrals, 13 were dismissed or ended with the student found not responsible, and 10 were still pending as of Sept. 21. The student conduct office issued 105 academic sanctions across the 50 remaining, confirmed AI-related cases. One of those confirmed AI-related cases was associated with the Universities at Shady Grove.

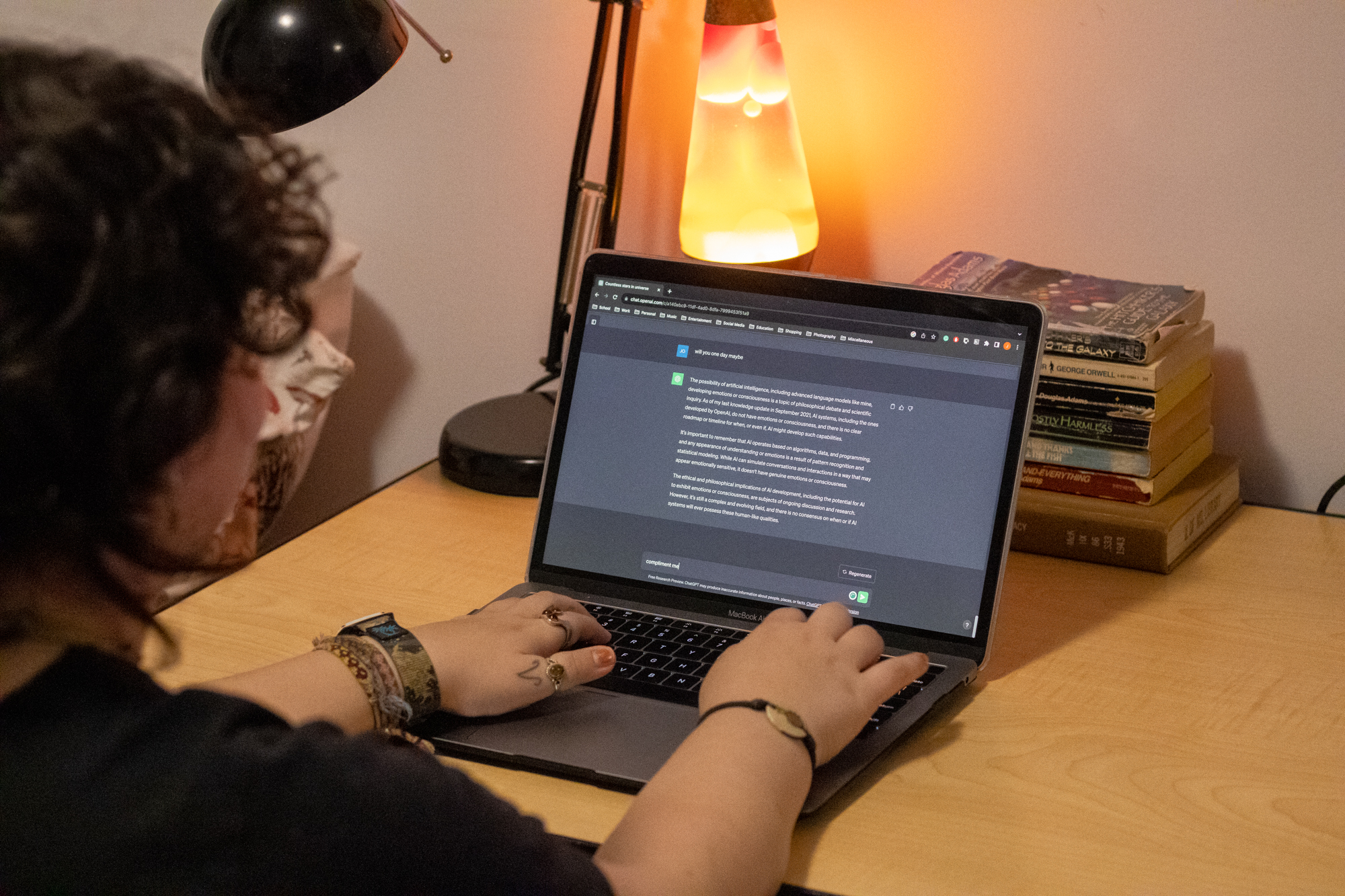

The rise in AI-related academic sanctions comes as AI chatbot ChatGPT marks one year since its release in November 2022. The technology’s capabilities, such as quickly generating unique content, have stirred controversy around its use in academic settings due to concerns over plagiarism and learning loss.

James Bond, this university’s student conduct director, said his department first began to suspect students of using ChatGPT in fall 2022 as they learned more about generative AI.

“I was concerned about how it could be used and the intentionality behind its use with students,” Bond said.

As of Sept. 21, 10 of the 50 confirmed AI-related cases stemmed from the behavioral and social sciences college — the highest number from any individual college at this university. The information studies college had the second-highest number of confirmed cases — nine — despite having about 65 percent fewer enrolled students than the behavioral and social sciences college in fall 2022.

In response to these preliminary concerns, university senior vice president and provost Jennifer King Rice emphasized the threat that AI-based large language models such as ChatGPT pose to education in an email to this university’s faculty members in March.

This university will need to determine “when the use of AI tools should be deterred and how to respond to inappropriate uses,” Rice wrote in the email.

In the email, Rice also announced new artificial intelligence guidance provided by this university’s teaching and learning transformation center.

The latest guidance for faculty members, updated in August, includes explicitly stating AI expectations in syllabi, encouraging multiple drafts for assignments, determining best practices for integrating AI into class activities and referring suspected AI usage to the student conduct office.

Despite these early warnings, the student conduct office received 679 referrals in the 2022-23 academic year — a nearly 9 percent increase in total referrals from 2021-22, according to Bond. The office also issued more than 1,300 sanctions in the 2022-23 academic year, marking an 11 percent increase from the total number of sanctions in the 2021-22 academic year.

The 679 referrals included at least 50 confirmed AI-related cases.

AI-related sanctions accounted for more than 8 percent of the student conduct office’s total academic sanctions in the 2022-23 academic year.

Education policy assistant professor Jing Liu said he has seen a rise in ChatGPT-related academic integrity violations.

“I do think now there is a surge of plagiarism using ChatGPT,” Liu said.

This prevalence of AI has led many faculty members at this university to alter their teaching philosophies.

Liu, who is teaching a graduate research methods course this semester, noted that because ChatGPT can generate answers, he now emphasizes a student’s thought process in assignments rather than only the final result.

Like Liu, computer science professor Hal Daumé highlighted how AI such as GPT-4 — a paid version of ChatGPT — can solve programming assignments for his artificial intelligence course, CMSC421.

“I am 99 percent sure that … could solve them basically perfectly,” Daumé said. “But that doesn't help students learn.”

Due to AI’s capabilities, the business school is encouraging its faculty members to emphasize higher-level problem-solving rather than tasks like summarizing information. Such “lower-order thinking” is AI’s strength, according to business school associate dean for culture and community Zeinab Karake.

Karake added that she and four other faculty members are part of a business school AI group that researches how to use AI to foster more advanced thinking skills in the classroom.

Outside of the business school group, this university invested $2.7 million to study experiential learning programs last year, according to an email Rice sent to faculty members on Sept. 7.

In addition to the increase in the number of AI-related cases, students with AI-related violations received, on average, harsher sanctions from the student conduct office.

Twenty-four students who were referred to the student conduct office for AI-related violations received an “XF” mark on their transcript, denoting failure with academic dishonesty. Nearly half of all confirmed AI-related cases carried an “XF” mark in the 2022-23 year. Less than 15 percent of all academic sanctions in 2022-23 included an “XF.”

AI-related violations do not have their own classification in this university’s academic misconduct policy.

Instead, they fall under other academic integrity violations, such as plagiarism and fabrication, according to Bond. As a result, AI-related cases are treated the same as cases from these categories.

Students who use ChatGPT without citing its usage are liable for plagiarism, and students who use ChatGPT without verifying the accuracy of its output could be held liable for fabrication, Bond added.

One of the challenges for the student conduct office is detecting the use of AI.

While AI-detection technologies such as Turnitin and GPTZero have emerged in recent months, there are many questions about their accuracy.

According to an April study by the Technical University of Darmstadt in Germany, the most effective AI-detection tool achieved less than 50 percent accuracy in detecting AI usage.

The subpar accuracy could lead to false negatives, in which a tool fails to detect actual uses of AI. It could also lead to false positives, in which a student is accused of using AI when they did not, according to MPower professor Philip Resnik. Resnik said false positives are more harmful to students.

“I am more concerned about the potential damage that could be done to a student who legitimately did write something themselves or use ChatGPT in a way that was permitted,” Resnik said.

Using AI-detection technologies could also unfairly penalize students whose first language is not English, Daumé added.

An April study by Stanford University researchers found that AI-detection tools often look for less sophisticated English, which is more common in non-native English writers.

In a September interview with The Diamondback, university president Darryll Pines said this university has established several committees to investigate AI-detection tools and hopes to continue this research moving forward.

“ChatGPT is just a tool,” Pines said. “But it is here to stay and we continue to evolve with it.”

While this university allows faculty members to use detection technologies for suspected cases, the student conduct office will use an AI-detection tool only if a faculty member used it in deciding to refer a student to the office, Bond said.

Bond acknowledged the technology’s shortcomings. He noted that the results of an AI detector, if an instructor used one, will be one of many factors considered in a student conduct case. Follow-up conversations with the student are the most important part of the process, he said.

“We know that no detector is perfect and that's why we don't just base it on a detector,” Bond said.