What started out as a class project has turned into a device to make life easier for visually impaired people.

In 2012, Lee Stearns, along with other University of Maryland students, developed a prototype of a device for a graduate course called Tangible Interactive Computing. The invention, called HandSight, augments the sense of touch by using a wearable camera along with audio and haptic feedback, said Stearns, a computer science graduate student and the lead student on the project. The device reads text, patterns and images, and conveys the information back to the user.

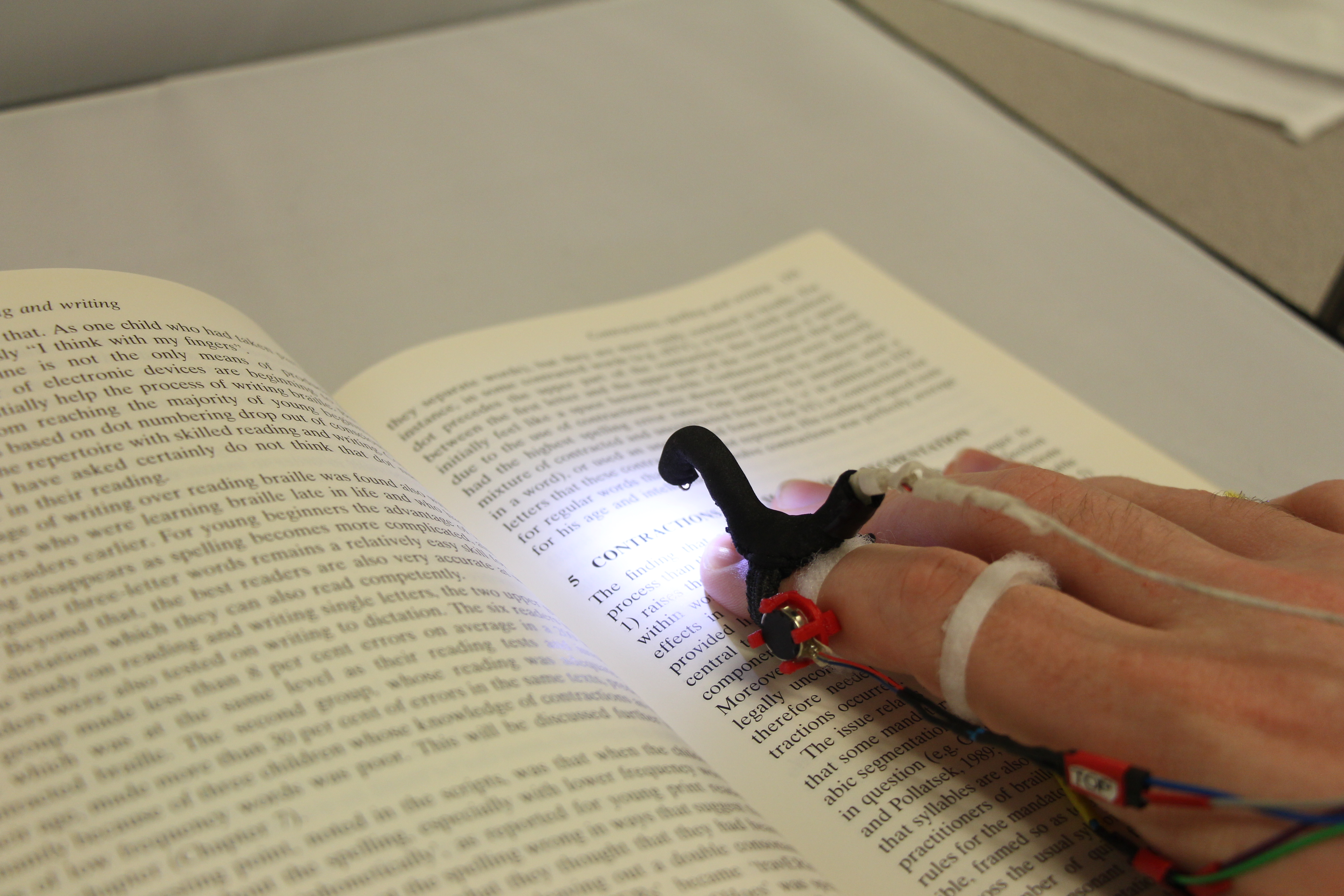

Stearns said the project has changed a lot since he helped create the first prototype in his graduate class. The first model was a full glove fitted with a small infrared reflectance sensor that detected black and white lines on a sheet of paper, but the current model straps onto an individual's fingertip and includes a 5-millimeter camera.

“It’s nice to be working on a project that is interesting, but that is also going to be helping people,” Stearns said. “That factor makes it fairly meaningful to work on.”

Research for HandSight is now based out of the university’s Human Computer Interaction Laboratory, located in Hornbake Library. The laboratory is dedicated to building and studying interaction technology for social purposes.

Jon Froehlich, a research team member and the computer science professor who taught the class where the project began, said haptic feedback technology, which applies touch sensations and controls to computer applications, uses a technique similar to the one a person might use to learn braille, but with printed text instead.

"The idea is to use the camera to make someone's fingers their primary sense, which can make daily living for visually impaired individuals easier," Froehlich said. "People will be able to go to a restaurant and read the menu with their fingertips. This device can even help someone get dressed by conveying the texture and color of fabrics back to the user."

With this technology, the device can sense the lightness or darkness of a surface and the distance from physical objects or obstructions, Froehlich added.

In 2014, the research team received a $1 million Defense Department grant for medical research, which helped expand the project. After receiving the grant, the team was able to add a 1-millimeter camera to its next prototype. There have been at least five prototypes to date, Stearns said, and the cameras, which are normally designed for medical uses such as surgery, cost about $800 each.

About 90 people, some of whom were visually impaired and some of whom were not, have tested the models through various user studies.

"It's a simple but elegant design," Yousuf Khan, a senior cell biology and genetics major, said of the current prototype. "This allows for the circumvention of braille translations in an unobtrusive way."

Froehlich said that while he wants to expand research on the device, he doesn't see it becoming a commercial product any time soon, even though companies have reached out to him.

"Having this in stores is certainly something that is an option, but not our primary focus," Froehlich said. "It is [more] to better understand how we can build these systems and re-appropriate cameras to help people than to focus on selling this. But it will always be a possibility."